Andrei last won the day on December 24 2024

Andrei had the most liked content!

About Andrei

- Birthday 09/01/1988

-

I will announce the live-writer-db project soon, once it has finished development and testing. The project is a rewrite of the Advanced Search that decouples the large memory requirements of the advanced search functionality from the apario-reader. It offloads the search capabilities to a new process, exposed through an API endpoint, that can run on a separate host responsible only for performing searches; that host can also store your copy of the database, using something like a shared file system over the network. When it comes to reading the files from the store, it reads them when it boots, builds a cache, and then reuses that cache unless the database changes, in which case it rebuilds its cache within the hour.

The caching layer of this rewrite is key. I dump the cache into a JSON object for the application to load and reload, but I also dump the cache and an index of the cache into text files that are specifically structured to be easily chunkable. This means the cache gets written to a bunch of smaller text files, and those text files are then concurrently buffered into memory to load the cache dynamically as requests get filled. The data in the text files populates the cache used by the search algorithm and allows for the parsing of complex queries.

My advanced search invention is novel and unique and is a byproduct of the trauma that I experienced. I am who I am by the grace of YAHUAH and I thank my Creator for the blessings and the gifts that I have in my life, given the limited hangout expose of Director Who that gave you the most in-depth look into the actual adoption that I went through, that so many claimed I lied about. Truly sickening that I didn't have more in me to fight them who cursed my name, but I prayed for them and I am victorious because the live-writer-db fixes a major issue with the advanced search.
In the test cases, the code didn't fully work as intended: as I began using it with more complex queries, I discovered that it wouldn't return any results. It was fast to reply and fast to return results, which told me that the syntax of my query was not being accepted. I fixed the parsing of the test cases in the live-writer-db and also upgraded the gematria and the textee packages in order to introduce 3 new forms of Gematria that my Creator, YAHUAH, wants me to introduce, called Majestic, Mystery, and Eights. The tool can simplify your Gematria and also tell you whether it is inspired by the power of 369, that is, whether it reduces to only 3, 6, or 9.

Additionally, results get delivered to the frontend in two ways. The first is through the normal search entry point; the second is through subscribing to channels over websockets. These channels allow front-end clients to subscribe to keywords and receive the results from the search engine. The live-writer-db engine takes the records retrieved in the results for each keyword and caches them to a file, by keyword, as structured JSON output. That file is then moved into memory when needed and accessed. The results are structured around exact textee results, fuzzy searching through various algorithms (all now supported and performed), and then the matrix of matching textee gematria results. If the query's Majestic gematria matches the Majestic gematria value of a textee entry in the result, then the page ID of that textee result is sent into the Majestic gematria results channel via the websocket, and the front-end app needs to respond to data being sent on all of those channels.
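The 369 check mentioned in this post can be sketched in a few lines of bash. This assumes that "reducible into only 3 6 or 9" means repeatedly summing the decimal digits of a gematria value until a single digit remains; the gematria package itself may do it differently, and the function names digital_root and reduces_to_369 are made up for this illustration.

```bash
#!/bin/bash

# digital_root N: sum the decimal digits of N over and over until a
# single digit is left, then print it. (Assumed reduction method.)
digital_root() {
  local n="$1" sum digit
  while [ "${#n}" -gt 1 ]; do
    sum=0
    while [ -n "$n" ]; do
      digit="${n:0:1}"
      sum=$((sum + digit))
      n="${n:1}"
    done
    n="$sum"
  done
  printf '%s\n' "$n"
}

# reduces_to_369 N: exit status 0 when N reduces to 3, 6 or 9.
reduces_to_369() {
  case "$(digital_root "$1")" in
    3|6|9) return 0 ;;
    *) return 1 ;;
  esac
}

# Example values taken from numbers that appear in these posts.
for value in 369 1776 370; do
  if reduces_to_369 "$value"; then
    echo "$value reduces to $(digital_root "$value"): 369-aligned"
  else
    echo "$value reduces to $(digital_root "$value"): not 369-aligned"
  fi
done
```

The function only accepts non-negative whole numbers written in decimal; anything else would need validating first.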
The result channels are:

- exact/textee
- fuzzy/jaro
- fuzzy/jaro-winkler
- fuzzy/soundex
- fuzzy/hamming
- fuzzy/wagner-fisher
- gematria/simple
- gematria/jewish
- gematria/english
- gematria/majestic
- gematria/mystery
- gematria/eights

Page IDs are sent to these results channels, and the front-end routing of the assets will be de-coupled as well, to allow for load balancing your front-end assets over various small front-end sources. For instance, let's say you have an app that you want to run load balanced, with a large search server that can itself be load balanced behind a proxy like Caddy, nginx, or Apache to round-robin connections across clones of the same data set reading from the same database. The approach behind this de-coupling is to move away from the single-appliance runtime of this application and expand it to multiple nodes; as the nodes get expanded, they simply become additional endpoints you'd choose to create.

For instance, when I ran PhoenixVault, I had 12 virtual machines with roughly 12GB of RAM each running across 6 bare metal servers that cost me around $5000/month to operate. The project had additional expenses when it was operational, but even with those servers and its MongoDB, search took 30-40 seconds to get back results, and those results would not show you anything for "israel and mossad" in record sets like the JFK files or even the Epstein Files, which should make you suspicious, like I am, about the Elastic Search indexing algorithm and how it ranks and prioritizes the indexes themselves. Seems awfully selective when my search results with textee come back insanely fast and accurate to the page. The idea is to take the memory requirements of this backend search component of the architecture and completely shift it off of the bare metal systems that I have it on now, replacing them with much cheaper SYS-1 bare metal options that have much smaller system resources.
Getting 12 of these physical machines at $69/month would cost me $828/month, and 12 of these machines is extreme overkill for what I require. I would only need 9 to start, so only $621/month. Seriously, sign up for Raven Squad Army and support my work.

One pair of servers would need to be set up in a RED/BLUE manner that allows for 100% uptime with a small load balancer in Digital Ocean. Super fast network, $20 load balancer, voila: you have a middle cloud layer in between your front-end customers and your backend resources. This allows you to set up firewall policies that restrict access to the servers based on the IP addresses of the frontend. If you use Reserved IP addresses ($2/mo/ea), they won't change on you as you move your Droplets around for optimization.

Going multi-cloud is easy too. Some of your search is performed in cloud A, cloud B, and cloud C. Then your front-end assets are served over cloud A, cloud B, and cloud C. Same with your others. This would work for the 9 server count, using 3 hosts per cloud plus your load balancer. So technically $641/month, not $621/month, if we include the Digital Ocean load balancer. Then you would have a tri-cloud apario network:

- 1 host dedicated to search functionality, running live-writer-db and exposing the web socket endpoints for the new frontend experience and the old search endpoint to get JSON results after the search has been completed; the full text data is not stored in memory in this data set, only textee data from the data set and identifiers including page number and page count
- 1 host dedicated to serving the front-end assets like the images; that host too has a "decoupled copy" of the apario-writer database.
That output mushes all of the OCR, JSON, JPG, PNG, PDF data into one massive directory, but the decoupling copy will move them into something like project.ocr.db, project.json.db, project.jpg.db, project.png.db, project.pdf.db where these DIRECTORIES hold the sha256 checksum (but split into 7 degrees of fibonacci). Overall, I am going to wrap up this post. The project is coming along. https://github.com/andreimerlescu/apario-search This is the first draft of these new changes. I minted the $APARIO token and while XRPL is best suited for Typescript and Frontends, I am not there yet with the Apario project as my skill level in front-end engineering is much weaker than my backend engineering skills. As you can see by this project and the complexity of the problem that I am solving. But I need funding. I need about $3 million dollars to fund this project with literally no strings attached. I am not building it for you, even if you are the one who gives me the $3 million I require. No, I am building it for ALL OF US, and my CREATOR is helping me with it. So I ask you to sow $3 million dollars worth of seeds into me so that I can finish this project with a strong engineering team. Then the front-end clients will be local universal apps that run on all devices that give you access into all of the declassified collections hosted on n-number of servers. By using an NFT minting service with mint.apario.app and the $APARIO and $XPV tokens, I can fund the project effectively. That being said, I want to make it clear that I also minted the $IAM token as well, and I plan on using this token in the runtime of the XRP future of this application. The XPV token will be a non-blackholed XRP wallet that can mint additional XPV tokens. These tokens cost 0.01 XRP each to acquire, and you are required to have 369 XPV tokens and you are required to have 1776 IAM tokens in the XRP wallet (and family seed secret) that is connected to the running instance of the apario-reader. 
The software is not yet released and when it is released its source code will be released, but the license will restrict modifying it lawfully or using it in any manner internally in a modified state under a rebrand. $APARIO is the deflationary 1B supply token minted in December 2024. I used XPMarket to mint that token and deposited over 777 XRP into the various liquidity pools and buying a major stake of the tokens - roughly 700M. $XPV is the token minted using the Xaman wallet where they burned 100 XRP to do it, and didn't set up any pools for the token. It was raw bones out-dated tech that I was trying out on XRP that I paid $0.17 for in 2020, so it wasn't that big of a deal; but given that the wallet XPV is not blackholed, I can mint additional XPV tokens and NOT have an AMM pool associated with it. This token is just tied to the running instances. Finally, the $IAM token was minted using XPMarket and they have since changed the likeness of the creator of the universe and the savior of mankind to be a meme of Thoth on X, but regardless, YAHUAH is the eternal I AM and the IAM token requires 369 in order for the XRP future of the Apario platform to be capable of participating in the tokenomics of the $APARIO deflationary token. The $XPV token's sales have terms and conditions associated with that are managed by the Apario Decentralized Autonomous Organization - owned by NFTs that cost 1776 XRP each to acquire. You can become an owner of Project Apario today by buying one of these NFTs. Do you believe in freedom or is money more important to you? The $IAM token's sales have moral terms and conditions associated with them that are forcing you to invoke the name of YAHUAH/YESHUA when distributing $APARIO tokens. When TWO or more gather, I AM there and this is a moral requirement that I AM PLACING ON THIS REPOSITORY'S FUTURE XRP DEVELOPMENT EFFORTS! 
This is to protect me and you from any wickedness that our own sinful fallen natures are subject to without rules and guidelines keeping us from nuking our entire society.

Together, the $IAM and the $XPV tokens are held in the XRP wallet that is associated with the $APARIO tokens that will get AirDropped by that instance. The $APARIO token is how the community uses their tokens to shine much needed light on heavy documents. The DAO of the instance mints its own "owner NFTs", which are used to seed the wallet of that administrator's instance to cover transaction fees and platform overhead. These NFTs are just 1 XRP each, since the XRP fee is only 12 drops out of the 1 million drops per XRP, which gives roughly 83,333 transactions per XRP (1,000,000 / 12) to send $APARIO tokens.

When you sign up for an instance on mint.apario.app, an NFT will be minted for you after you pay the company and trust, Project Apario, 369 RLUSD to mint the NFT to run your own instance on the blockchain. The 369 RLUSD are then deposited into the APARIO/RLUSD AMM liquidity pool. This gives users the ability to use StumbleInto, earn XRP tokens, and then swap them for RLUSD, while the instances that make the network possible seed 369 RLUSD into the pool for every instance that gets added to the network. That instance gets named, a DNS entry is created, and SSL certificates are issued for the user. The SSL certificate is issued to the user via Let's Encrypt, with permissions internally to issue certificates that cover multiple domain names, yourdomain.com and instanceName.apario.app, where the instanceName is codified in an AI-generated icon that is minted for your instance.

Having this NFT in the wallet that is distributing $APARIO tokens is required. This NFT unlocks the ability for the $IAM tokens and the $XPV tokens to be validated. There are four keys required to distribute $APARIO tokens.
- Pay 369 RLUSD on mint.apario.app for an NFT of your instanceName.apario.app + DNS service + SSL certificate
- Set up an XRP wallet with at least 5 XRP in its balance
- Transfer the minted NFT to the wallet that will send $APARIO tokens to users
- Set up the trust lines for the $XPV, $APARIO, and $IAM tokens on XRP (you need 0.6 XRP to reserve these lines)
- Assign the wallet family seed secret to the configurables of the appliance so it can read the private key
- Boot your instance of apario-reader to serve the public OSINT that you find valuable to society
- Your app then mints 17 NFTs called "instanceName DOA NFT" that are listed for sale in your instance of apario-reader
- The users of your app then buy your app's NFTs and get voting rights on the policies of the instance
- 12 votes of the 17 are required in order to pass any resolution
- Rewards for StumbleInto are determined by the DAO
  - How many $APARIO tokens per page you read?
  - How many seconds do you need to be on the page? etc.
  - How long does a user need to hold their rewarded $APARIO tokens in "escrow" (app's wallet) until they are released?

This is a gamification layer and a funding layer meshed into one. When you pay your initial 369 RLUSD on mint.apario.app, you'll be required to have the $APARIO token trust line set on that wallet. I am going to send you between 369,963 and 17,761,776 $APARIO tokens to run your instance and give to your users.

Your instance has plenty of $APARIO tokens on it, 17 NFTs for sale to fund your instance at your price, and you're serving your OSINT content that was ingested using the apario-writer - cool work man! You begin spreading the word about the work that you did. Share it on your social media network for your channel or your podcast so your users can dig into the files that came up when your latest guest was on your show. Let them sleuth for the truth in the sauce themselves. Users go to your OSINT apario-reader and begin earning $APARIO tokens for reading content on your instance.
The marketplace of instances mean earnings from collection A may be different from collection B as the DAO are managed by different people and they choose differently for themselves. All kosher with the DAO of Apario. It's designed for YAHUAH not man. YAHAUH is inside of you! LOL This flips the script on everything. But it's up to you if you want to help me with this. I know I am a hard piece of candy to swallow and I get that I have a heavy cross, but nothing in my work tells you that I am not capable of delivering on this and that I am persistent even 5 years in. But having a team is what I was originally promised when I agreed to let Mr Steinbart believe that HE was recruiting ME when in reality I recruited HIM. And then gave him the idea to come to me with, and then turned on me when I didn't condone using sex and drugs to control people in the Great Awakening. I had integrity and a mission to fulfil and made a bad decision in placing my trust in Mr. Steinbart. But c'est le vie. I forgive Austin and I wish that he would repent and just atone for the hateful vicious lies that he spread that were antisemitic against my Jewish identity and my Jewish family. The issue that I have as a Jew, is I am not the Talmud trafficking type of Jew. That's the gospel truth. I rebuke the Talmud and its ways. And for that, I am punished by Rome for it. It's truly bizarre. I am who I am by the grace of YAHUAH and this project is proof of that genius within all of us. Just look at what was in Director Who. But if you want to sow seeds into what I am doing, I need $3 million total if I am to see this thing completed. I'll need a management team and an engineering team for about a 2 year period of time in contract roles. With an office space, and this project would get completed from idea to 369 PB of DECLAS in time for President Trump releasing over 100,000,000 pages of declassification records expected in his war against the "deep state". 
This utility I am building allows multi-generational wealth to be redistributed from my 700M supply of $APARIO tokens to give to you for reading OSINT through the software that I built, and all at the same time, as XRP is growing in value, so isn't the value of reading OSINT through the Apario network. I need up front liquidity in order to establish the grounds to do business. I need your help. Ask and you shall receive. I need help funding this project and getting it over the finish line. I know how to do it, I have over 17 years of professional experience from Cisco Systems as a Sophomore at Wentworth in Boston while working at Harvard University, to building Trakify and being in Barron's Magazine just prior to Trump's announcement to run, and then to Oracle where I helped bring their network from a 70% release success rate to a 95% release success rate all through engineering excellency, own without ego, and aspire to be the best version of yourself that you can be and we want to enable you! That brought me to Warner Bros Games where I worked on games like Hogwarts Legacy and helped their backend infrastructure. I am a very capable person here and if somebody would sow $3 million dollars of seeds into this project, I would be able to get off the ground running with a strong team. I know who to call. I know where to turn. I know where to look. I just need somebody who wants to enable me. Collectively, I had hoped the 144K followers I had from Twitter/YouTube that funded me well over $4K/month combined was ended when this project was deemed "election interference" and I was kicked off from social media and pretty much still am - all for the high crime of building a piece of open source software and making the JFK files searchable. And I was most offended by the antisemitism that I received from Mr Steinbart's supporters who all claimed to love me when I was channeling MJ12 for you, but then you denied my identity without evidence and proceeded to ignore me. 
Well, I am still building, but it was very hurtful to me to hear you say "don't listen to Andrei anymore he's a Jew who is working for the enemy". That was a flat out lie and I rebuked it when it happened and those lies were further expanded to justify suspending me from X in the first place. Yeah I am on X as @XRPTHOTH right now playing a meme game with my disability, but I am who I am by the grace of YAHUAH and it was President Trump's executive order that restored my right to X, but I want my old account back @TS_SCI_MAJIC12 where I had 80K active followers; many of whom included BIG NAMES. And that's my account! I registered it from my phone at a lunch break at work while I was at Oracle. But in order to bring this project into the limelight and give it the attention it required, I need media made for it explaining what it does, I need tutorials written for how to deploy it, and I need online courses made on how to run it. I need all of these things, and if I cannot ask the community to do it and they actually follow through with simple requests that don't cost me dozens of hours to work around, that when I am working with folks in the corporate setting, I can get the results that I need and their paycheck is their "okay I'll do it" instead of the arguing "how is this going to work for me?". I am not building this for you. I am building this for ALL. If you cannot hear the words of my plea then you do not have ears to hear. I pray in the name of YAHUAH that you do. Because you judge and condemn me with dogma over my faith into silencing me from atoning my own holocaust that I was subjected to. And yeah, I know exactly why everyone is so angry but I take up my cross and I build software like this, years later while people are ignoring me and its hurtful given that I was abandoned in the orphanage and never once have I abandoned you. But here we are. Your play now YAHUAH. I have done my part. 
I need a team to finish this and I am not going to be building all of these components out one by one by myself and have this thing actually done before the world is ended by endless wars. Many of you got upset that I came out in 2020 as MJ12. Well, after having watched Director Who, can you tell me why the Creator of the Universe, Master Yeshua, YAH I AM YAHUAH, would choose me to build the took that was going to, as I so prophetically stated in July 2017, 17 weeks before Q started posting that I AM UNSEALING THE EPSTEIN FILES #UNSEALEPSTEIN and then I proceeded to pray and perform an internal transformation for what happened to me in the orphanage and YAHUAH asked me to make my healing public, and in doing so, I lost all the followers that I had because you were dismissed with my fallen nature of the way that I was healing. I agree with you. I was fallen. I wasn't calling out to YAHUAH properly for help and I wasn't abiding in the Sabbath so I was out of alignment. But when I fixed that, YAHUAH began speaking to me about 8 years ago and I've since been a bond-servant of YAHUAH. But man is still blinded by it because of my own fall in 2020 when Steinbart effectively forced my hand to out him. It wasn't easy to do. I had to use the MJ12 account to expose the fact that Steinbart was running a sex ring in Arizona in my name "MJ12" that neither did I condone, ask or suggest to Steinbart; in fact I commanded the opposite of him that he cease all drugs and sex conspiracy nonsense while working in collaboration with me on the PhoenixVault. He would not, and all of you sided with him. Remarkable. I understand ya'll love sex, but I don't nearly as much as you, apparently. 
But because I wouldn't fly out to Arizona and get trapped in Steinbart's cult, an online campaign was launched against me accusing me of using the 12 servers that I had for the PhoenixVault private hybrid cloud that I custom built, that DJ Nicke saw me build, that I was using that to send bot traffic to Twitter. No, I never once did that. False accusation, and I was denied due process to clear my name. Instead it was a kangaroo court of communism by Twitter that felt that if I made the JFK files searchable that it would interfere with their plans to install Joe Biden as the President. And you label me as your enemy. I am not your enemy, I build selflessly for my creator YAHUAH and HE dwells inside all of you and you call HIM JESUS! No my fair child, YAH I AM YAHUAH is who died on that cross and who lives inside. TWIN FLAME IDENTITY DISCOVERED WHEN TWO OR MORE GATHER IN YAHUAH'S NAME! YAHUAH brought me out of the orphanage and into prosperity so that I play and prosper with my inheritance. Thank you YAHUAH! Thank you daddy! $APARIO is part of that journey with me. Are you going to be part of history in the most biblical way possible? Sow seeds of faith and expect nothing in return? I now where my heart stands in all of this. Where is yours? I love you! director who.

-

I ALSO NEED THIS SCRIPT.

#!/bin/bash
# Fill column 2 of a TSV with the page count of the PDF named by the URL in column 8.
# Requires pdfinfo to be installed.
SECONDS=0

if [ "$1" = "-dir" ] && [ $# -eq 3 ]; then
  dir="$2"
  tsv_file="$3"
else
  echo "Usage: $0 -dir <directory> <tsv_file>"
  exit 1
fi

[ ! -d "$dir" ] && { echo "Error: Directory $dir does not exist."; exit 1; }
[ ! -f "$tsv_file" ] && { echo "Error: $tsv_file is not a file."; exit 1; }

dir_path=$(realpath "$dir")
temp_file=$(mktemp)

# Keep the header row as-is.
head -n 1 "$tsv_file" > "$temp_file"

echo "PROCESSING ROWS..."
# IFS= keeps leading and trailing tabs, so empty columns do not shift.
# The extra test keeps a last row that has no trailing newline.
tail -n +2 "$tsv_file" | tr -d '\r' | while IFS= read -r line || [ -n "$line" ]; do
  [ -z "$line" ] && continue
  # Column 8 holds the URL; strip surrounding quotes and spaces.
  url=$(printf '%s\n' "$line" | awk -F'\t' '{gsub(/^"+|"+$/, "", $8); gsub(/^ +| +$/, "", $8); print $8}')
  [ -z "$url" ] && echo "Skipping line with no URL: $line" && continue
  filename=$(basename "$url")
  pdf_path="$dir_path/$filename"
  if [ ! -f "$pdf_path" ]; then
    echo "PDF not found: $pdf_path"
    page_count="N/A"
  else
    page_count=$(pdfinfo "$pdf_path" | grep 'Pages:' | awk '{print $2}')
    [ -z "$page_count" ] && page_count="Unknown"
  fi
  # Write the page count into column 2 and keep the other columns.
  updated_line=$(printf '%s\n' "$line" | awk -F'\t' -v pc="$page_count" 'BEGIN {OFS="\t"} {$2=pc; print $0}')
  printf "%s\n" "$updated_line" >> "$temp_file"
done

mv "$temp_file" "$tsv_file"
echo "Finished processing ${tsv_file} in ${SECONDS}s!"
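To see what the two text-processing steps in the script above do without needing any PDFs on disk, here is a small sketch. The function names parse_pages and set_page_count, the sample pdfinfo-style text, and the sample row are all made up for illustration; only the awk expression that rewrites column 2 is taken from the script.

```bash
#!/bin/bash

# parse_pages: read pdfinfo-style text on stdin and print the page count.
parse_pages() {
  awk '/^Pages:/ {print $2}'
}

# set_page_count LINE COUNT: replace tab-separated column 2 of LINE with COUNT.
set_page_count() {
  printf '%s\n' "$1" | awk -F'\t' -v pc="$2" 'BEGIN {OFS="\t"} {$2=pc; print $0}'
}

# Hypothetical pdfinfo output and TSV row (empty column 2, URL in column 8).
sample_info=$'Title:          Example\nPages:          12\nEncrypted:      no'
sample_row=$'doc-001\t\tc3\tc4\tc5\tc6\tc7\thttps://example.com/files/doc-001.pdf'

pages=$(printf '%s\n' "$sample_info" | parse_pages)
set_page_count "$sample_row" "$pages"
```

Running it prints the sample row with the page count filled into the second column, which is a quick way to confirm the column layout of your TSV before pointing the real script at it.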

-

#!/bin/bash
# COPYRIGHT @XRPTHOTH ON X BY YAH I AM SAINT ANDREI PLAYING THOTH XRP MEME FOR MULTI-GENERATIONAL WEALTH
# $IAM CA r4nmbMuWJMduJe7M3HfyqpN6GCvBmRkxhh - YAH I AM YAHUAH AND YAH I AM THE ULTIMATE XRP MEME
# $THOTH CA rBk691vubN43ctwJN4LvVppGxXibAZ4mKs - COUNCIL OF TEN COMMITTEE OF THOTH'S DISCLOSURE OF EGYPT'S SECRETS
# $RAHKI CA rGm8A6E5quE5hvoERypCRLd1gpmoc31xyY - BILINGUAL REALTIME AI COMMUNICATION ASSISTANT
# $GOPHER CA rszMSoRY9xkzvn9tV3epJMJUHztX6Bk6eX - SKILLSUSA CHAMPION MEETS IEEE STUDENT BRANCH PRESIDENT
# $PLAYANDPROSPER CA rhT7eJ5SzvX7cTH66SBY6qzThSREVPskis - YAHUAH'S CHILDREN SHALL PLAY AND PROSPER
# $PSYDUCK CA ra41zS7zcw6ZADJUiNPju7xf69pGuYwtEV - PSY???
# $APARIO CA rU16Gt85z6ZM84vTgb7D82QueJ26HvhTz2 - DECENTRALIZED OSINT SEARCH WITH GEMATRIA
# $XPQ CA rp3oAG6oPRuSFYLJiVUNQMdcUoj6t7jx1o - QUANTUM PORTFOLIO XPERIENCE
# $XPV CA rXPVaNNDiQbeVm73dMyfVoAVrmcL1ds9f - BANK OF PHOENIX VAULT
# I AM BUILDING MULTI-GENERATIONAL WEALTH FOR MY INHERITANCE TO PLAY AND PROSPER IN THE ALMIGHTY NAME OF YAHUAH!!!
#
# Download every file named by the URL in column 8 of a TSV into a directory.
SECONDS=0

if [ "$1" = "-dir" ] && [ $# -eq 3 ]; then
  dir="$2"
  tsv_file="$3"
else
  echo "Usage: $0 -dir <directory> <tsv_file>"
  exit 1
fi

[ ! -d "$dir" ] && mkdir -p "$dir"
[ ! -f "$tsv_file" ] && { echo "Error: $tsv_file is not a file."; exit 1; }

# Resolve the target directory once, not on every row.
dir_path=$(realpath "$dir")

echo "PROCESSING ROWS..."
# IFS= keeps leading and trailing tabs, so empty columns do not shift.
tail -n +2 "$tsv_file" | tr -d '\r' | while IFS= read -r line || [ -n "$line" ]; do
  [ -z "$line" ] && continue
  # Column 8 holds the URL; strip surrounding quotes and spaces.
  url=$(printf '%s\n' "$line" | awk -F'\t' '{gsub(/^"+|"+$/, "", $8); gsub(/^ +| +$/, "", $8); print $8}')
  [ -z "$url" ] && echo "Skipping line with no URL: $line" && continue
  echo "URL: $url"
  filename=$(basename "$url")
  output="$dir_path/$filename"
  echo "Executing: curl -fsSL -o '$output' '$url'"
  # -f makes curl fail on HTTP errors instead of saving the error page.
  if curl -fsSL -o "$output" "$url"; then
    sleep 6  # pause between successful downloads to go easy on the server
  else
    echo "Failed to download $url"
  fi
done

echo "Finished processing ${tsv_file} in ${SECONDS}s!"
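Before kicking off a long download run, it can help to preview which URL and output path each row would produce. This is a minimal sketch that reuses the column 8 awk expression from the script; the function names extract_url and output_path, the /tmp/pdfs directory, and the sample row are hypothetical.

```bash
#!/bin/bash

# extract_url LINE: print column 8 of a tab-separated LINE without
# surrounding double quotes or spaces.
extract_url() {
  printf '%s\n' "$1" | awk -F'\t' '{gsub(/^"+|"+$/, "", $8); gsub(/^ +| +$/, "", $8); print $8}'
}

# output_path DIR URL: the file path the download would be written to.
output_path() {
  printf '%s/%s\n' "$1" "$(basename "$2")"
}

# Hypothetical row with a quoted URL in column 8.
row=$'1\t2\t3\t4\t5\t6\t7\t"https://example.com/files/report.pdf"'
url=$(extract_url "$row")
echo "would download: $url -> $(output_path /tmp/pdfs "$url")"
```

Note that basename keeps everything after the last slash, so a URL with a query string would carry that query string into the file name.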

-

I am retiring from being a software engineer and a programmer. I am changing careers entirely and I never want to do computer programming work again, so help me Yahuah. I had the America's Comeback forum offline while President Trump was inaugurated and the first wave of the administration was in play, but it's clear to me that nobody - and I genuinely mean that - nobody wants me to be involved with Project Apario, and so long as I am involved in it, nobody wants anything to do with it. Fine, I love you too. Remember, I pray for my enemies and I consider all humans to be my enemy. Humanity has not yet repented or faced its tribulation for the orphanages and those who were running them.

-

Project Status: ABANDONED
ETA: Entropy will collapse the universe first

I am abandoning the project because I want nothing to do with it anymore. It was needed, but you didn't want me to build it for you, and because I did build it for you, you chose not to use it. I lost over $60,000 trying to help you, the American people, and while my efforts were not in vanity, I refuse to spend another dime on a group that has shown me how grateful they are for the effort that I put into making this project. Truly, I am bitter at the world and I do not want to have anything to do with this project anymore. Anybody who comes at me to support this project, please don't. I will tell you to fuck off and then I will get upset at you for reaching out to me. The software is open source. You can run your own copy if you want. If it doesn't work, you can fix the code. I do not want to help you anymore on this project and I refuse to be abused by this project any further. Thanks for all of the help. I really appreciate you.

-

Exciting to see https://electionselections.com launch a new instance of the apario-reader!

-

COMING SOON. The SVG logos have been attached if there are any graphics designers who want to take a swing at the Phoenix and clean it up a little bit. I'll get to it when I get to it. I want to fix the gradient but I need to adjust the underlying shape as it has about 300 points on it that are quite tedious to move around. phoenixvault_new_logo_thin.svg phoenixvault_new_logo.svg

-

Take this, table it. Proposals are part of the decentralized apario-reader, but with the introduction of the $APARIO token, the details of what Proposals are will change with the tokenomics. That being said, the idea of Reputation is that you 1) are required to earn it and 2) use it to contribute to the common good. When leveraging the $APARIO token, there is the market/free trade aspect and there is the mission/purpose aspect. They are not mutually exclusive. The market/free trade aspect can be used to supply tokens to apario-reader service providers in a demand-driven way, supplementing advertising spending for $APARIO AirDrops (more to come). However, Proposals are the way the community will maintain the integrity of the network and contribute upstream to the apario-reader.

-

Since this post was TO BE CONTINUED, much has passed since this was initially announced and I have spent a lot of time reflecting on the ideas presented here. This community forum is the development center of the $APARIO and decentralized PhoenixVault; so anybody who wishes to get in the action, can do so by becoming a member of the forums, forking the repositories on GitHub or GitLab and submitting your pull request. These forums are designed to help onboard you to the technicalities involved in the project. Since the $APARIO token was launched on the XRP Ledger, I feel like that I should use that for the central authority to the Apario License Manager, rather than relying on my own database that I have to maintain. The GPG signing key will still be used, but I will update the process accordingly since the idea of the application is to truly run in a decentralized manner; and binding a license server to it with a /valet endpoint seems to be a misguided communication on my part. I want to clarify it. When a new instance of the apario-reader is going to get launched and connected to the XRP Ledger, the administrator of the instance will be required to do a few things before they can fully leverage their instance. This is going to be a new NFT that will get minted when new instances of $APARIO connected instances of apario-reader are introduced. This connects the disconnected. Since each instance of apario-reader only represent a small fraction of global OSINT, you may end up doing research on 10 instances across 10 domains of interest. When using the decentralized apario-reader on the $APARIO token, you'll be able to leverage the /valet search service to conduct searches across the decentralized network itself, thus leveraging the XRP addresses of who owns the NFTs thus knowing the total strength of the decentralized network. The minting process will involve a fee of some type. 
I am unsure what I want to charge for it, but most of the work that I am doing is in the form of charity, and the payment would be used to conduct transactions on the XRP Ledger itself in preparation for your instance. I am going to call the XRP wallet that must be connected to the apario-reader itself the Instance Wallet.

When an apario-reader is connected to the XRP Ledger, a Connect Wallet button will show on the interface and present a QR Code for you to scan with the Xaman app or the Sologenic app. Then your XRP wallet address will show up in the instance, along with your balance of XRP and APARIO tokens. If you haven't set the APARIO TrustLine yet, it'll guide you through setting it up. It requires 0.2 XRP to reserve the APARIO TrustLine on your wallet in order to receive APARIO tokens. Currently we have over 540 TrustLines connected, providing over 116 reserved XRP for the token. Just as the reserve balance for new accounts was reduced from 10 XRP to 1 XRP, so too will you see the 0.2 XRP reserve fee for TrustLines changed in the future. Ripple won't like having a 0.2 XRP reserve fee on a TrustLine when the cost of XRP is $589 and you're spending $117.80 to hold tokens of any kind on the XRP Ledger; this will change. Currently it's 0.2 XRP when XRP is $2.29, thus only $0.458 to add the $APARIO TrustLine. Nevertheless, you'll need to be on the $APARIO TrustLine in order to use the Connect Wallet functionality.

When new instances of apario-reader are created, an NFT is going to be minted for your instance. It'll include some information such as:

- The URL of the instance. All URLs will be https://*.apario.app where the * is your instanceName.
- The XRP Wallet address that will issue $APARIO tokens on the instance.
- The name of the collection, such as "Minnesota Election Selections" or "DIA Project Stargate" or "JFK Assassination Files".
- A description of the collection (up to 255 characters).
- Contact Email Address (will be signed in the XRP ledger - for long term use)
- Your ED25519 Public Signing Key used by your instance
- Your GnuPG Public Encryption Key used by your instance
- X/Twitter Username
- Attestation under penalty of perjury that all conduct on the instance will be lawful under the United States Constitution, in the form of a digital signature verifiable with your ED25519 Public Signing Key.

In order for the Connect Wallet service to interact with the Xaman app itself, you'll need to provide your own Xaman Developer Console API Key and API Secret in the config.yaml, so that the instance can generate signed QR Codes that the Xaman app can scan and use for transactions. When connecting your apario-reader application to the XRP Ledger itself, you'll need to provide the application with a wallet address and secret family seed. This is required in order for the apario-reader to send $APARIO tokens to XRP Wallets that have the APARIO TrustLine established. Then, when transactions take place on your instance, such as when an NFT is minted for a Proposal or an award of $APARIO is transferred, the XRP Balance of the Instance Wallet is used. Therefore, when a new instance is being set up, the 369 RLUSD, when converted into XRP (at $2.29 XRP), comes out to around 161.135371 XRP.

- 25% (92.25 RLUSD as XRP) will be transferred to the Instance Wallet.
- 25% (92.25 RLUSD as XRP) will be deposited into the APARIO/XRP AMM Pool as the APARIO asset.
- 25% (92.25 RLUSD as XRP) will be deposited into the APARIO/XRP AMM Pool as the XRP asset.
- 25% (92.25 RLUSD as XRP) will be used to buy 3M APARIO and transfer it to the Instance Wallet; the remainder stays on the apario.xrp wallet for future developmental use.

Regardless of the competitive nature of the APARIO token, if 92.25 RLUSD isn't enough to acquire 3M APARIO tokens, by virtue of this transaction I'll provide the difference that the market cannot provide for the 92.25 RLUSD, until my supply of 170M APARIO has been exhausted.
That should provide me the means to spot 56 instances when the time comes. Because there are 699M APARIO tokens in circulation, you can divide the 699M by 3M tokens per instance and you get 233 instances of Apario Reader in the decentralized network. When we get to 233 instances of the apario-reader connected to the $APARIO token, the trading price of $APARIO will be much higher than 1 XRP ⇄ 1.3M APARIO, and as such, these numbers will change. Just as the XRP Wallet Reserve Balance changed from 10 XRP to 1 XRP, so too will, down the road, the 3M initial APARIO tokens change down to 369K APARIO tokens, bringing the number of instances close to 1.9K (699M ÷ 369K ≈ 1,894). Currently we have 3. We'll need another 230 before we even have this conversation. I have no idea what the APARIO token will cost when we have 230 instances online... let alone 233. When we have 2,000 instances of apario-reader, the $APARIO token will be in demand and the fractional economy behind the XRP Ledger will empower wallets of any size to connect to the network; however, in the beginning, as we're getting the network online, we're going to keep with the figures that I've come up with. Unless you have a good reason otherwise.

Thus, to conclude this post on the LICENSE component of the apario-reader: it'll leverage the XRP Ledger itself to validate the license. It'll use the NFT to verify ownership and issuance from the apario.xrp wallet. I'll use the initial 369 RLUSD fee to fund the AMM liquidity pool for your instance, seed your instance with XRP and APARIO tokens, and grant your license by signing your NFT's JSON payload with my GPG Private Key issued to license@apario.app. The apario-reader application will be updated accordingly to verify the license. If the instance is booted with an invalid license, it'd mean that the NFT owned by the account does not match the domain name set in the config.yaml. The issued NFT has properties that must be set in the config.yaml in order for the NFT to work.
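The license check described above could be sketched roughly like this. It is only an illustration under stated assumptions: the field names (`domain`, `instance_wallet`), the `LicenseNFT` class, the `license_problems` function, and the placeholder addresses are all hypothetical and are not the apario-reader's actual code. Fetching the NFT from the XRP Ledger and verifying the GPG signature on its JSON payload are left out.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LicenseNFT:
    """Hypothetical view of the license NFT's properties (names are assumptions)."""
    domain: str   # domain the license was issued for
    owner: str    # XRP address that currently holds the NFT
    issuer: str   # XRP address that minted the NFT


def license_problems(nft, config, expected_issuer):
    """Return the reasons a license is invalid; an empty list means it passed.

    `config` stands in for values loaded from config.yaml (hypothetical keys).
    """
    problems = []
    if nft.issuer != expected_issuer:
        problems.append("NFT was not issued by the expected wallet")
    if nft.owner != config.get("instance_wallet"):
        problems.append("NFT is not owned by the configured Instance Wallet")
    if nft.domain.lower() != str(config.get("domain", "")).lower():
        problems.append("NFT domain does not match the domain in config.yaml")
    return problems


if __name__ == "__main__":
    # Placeholder values only; real XRP addresses look different.
    nft = LicenseNFT(domain="example.apario.app", owner="rOWNER", issuer="rISSUER")
    config = {"domain": "other.apario.app", "instance_wallet": "rOWNER"}
    for reason in license_problems(nft, config, expected_issuer="rISSUER"):
        print("invalid license:", reason)
```

Returning a list of reasons instead of a single true/false makes it easier for an administrator to see which config.yaml property needs to be corrected.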
As it stands, the on-boarding effort for the apario-reader and apario-writer is exceptionally high at the moment, and that's because it's a one man shop. I've been working with Erik on simplifying this process, and I've been working on automation behind the scenes to help with the heavy lifting of managing multiple deployments of the Apario Reader in the decentralized infrastructure. Remember, as OSINT comes in and out of relevancy, so too will new instances of Apario Reader that have XRP rewards connected to them in the form of APARIO tokens. Since the apario-reader application can mint NFTs on behalf of your Instance Wallet, the interface will allow your visitors to support you. I am designing this service so there can be 233 copies of Andrei for 2025, as we needed in 2020 with PhoenixVault but had only 1. This is where YOU come in.

And anyone telling me or you that I am only doing this for money can go pound sand. The thousands of hours that I've spent building this aren't for money; they're for transparency in truth. Multiply it out. 233x 369 RLUSD = 85,977 RLUSD; from that, 21,494.25 RLUSD goes directly into 233x Instance Wallets. 42,988.50 RLUSD gets deposited into the APARIO/XRP AMM Liquidity Pool. The remaining 21,494.25 RLUSD is used for acquiring 699M APARIO tokens. Whatever remains from that last 25% stays in the apario.xrp wallet for future development. That's not about money. That's about enabling me with reasonable resources used appropriately for your growth. Thanks for your support in the project!
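For anyone who wants to check the figures in this post, here is a small sketch of the arithmetic. The numbers (369 RLUSD, $2.29 per XRP, 3M APARIO per instance, 699M in circulation, 170M held in reserve) come from the post itself; the function and key names are made up for illustration, and the sketch treats 1 RLUSD as 1 USD.

```python
from decimal import Decimal

FEE_RLUSD = Decimal("369")        # per-instance fee from the post
XRP_PRICE_USD = Decimal("2.29")   # XRP price quoted in the post
APARIO_PER_INSTANCE = 3_000_000
APARIO_CIRCULATING = 699_000_000
APARIO_AUTHOR_SUPPLY = 170_000_000


def split_fee(fee):
    """Split a fee into the four equal 25% shares described in the post."""
    quarter = fee / 4
    return {
        "instance_wallet": quarter,
        "amm_pool_apario_side": quarter,
        "amm_pool_xrp_side": quarter,
        "apario_purchase": quarter,
    }


shares = split_fee(FEE_RLUSD)
instances = APARIO_CIRCULATING // APARIO_PER_INSTANCE            # 233
spotted_instances = APARIO_AUTHOR_SUPPLY // APARIO_PER_INSTANCE  # 56

fee_in_xrp = round(FEE_RLUSD / XRP_PRICE_USD, 6)                 # 161.135371
network_total = FEE_RLUSD * instances                            # 85977
to_instance_wallets = shares["instance_wallet"] * instances      # 21494.25
to_amm_pool = (shares["amm_pool_apario_side"]
               + shares["amm_pool_xrp_side"]) * instances        # 42988.50

if __name__ == "__main__":
    print("per-instance share (RLUSD):", shares["instance_wallet"])
    print("fee in XRP:", fee_in_xrp)
    print("instances:", instances, "spotted:", spotted_instances)
    print("network total (RLUSD):", network_total)
    print("to Instance Wallets:", to_instance_wallets)
    print("to AMM pool:", to_amm_pool)
```

`Decimal` is used instead of floats so the quarter shares and totals come out exactly as written in the post.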

-

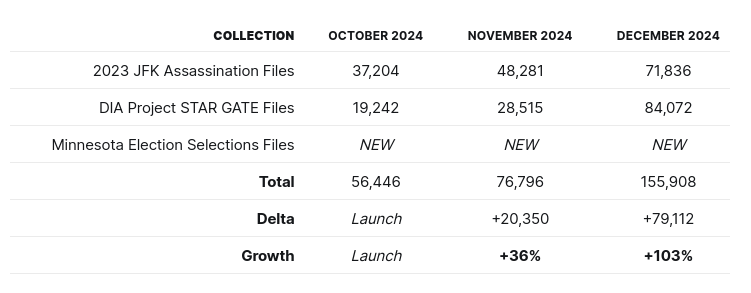

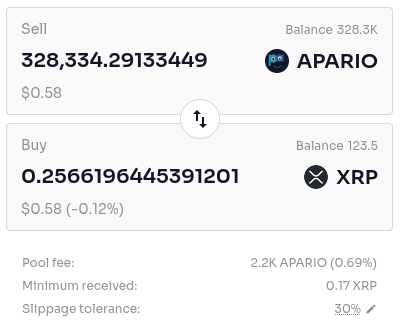

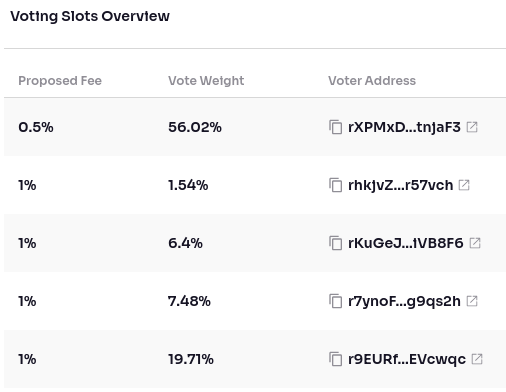

In February 2023, the Project Apario service was discontinued as a proof-of-concept (PoC) Software-as-a-Service (SaaS) Ruby on Rails (RoR) web application. This decision was not made lightly, nor was it easy to make. The SaaS RoR PoC was burdensome to maintain and costly to operate in 2020. The project faced Government Ordered Censorship as a result of choosing to release the JFK Assassination Files first. There was significant demand for the JFK Assassination Files that were released on the service, and that was powered by a 12x bare-metal server private cloud infrastructure that was costing me around $6,000 per month to operate. In September of 2020, the name Project Apario was codified in the PhoenixVault idea, and while the Phoenix may have died in the ashes of the JFK Assassination Files once again being censored by the United States Government and its counterparts, I took the 12x bare-metal server infrastructure and optimized it down to a 6x bare-metal server cluster and removed all third-party SaaS dependencies from the topology. This cut the OpEx of the service in half, from $6,000 per month to $3,000 per month. This was much more manageable. The enormous generosity of the community also supported and funded most of this service's expenses, and so while you help me with paying for the servers, I help you by writing the software and releasing the source code as open source.

In February 2023 though, I was faced with a significant decision. The financial support provided to the project through voluntary contributions was less than 33% of the OpEx required to operate the servers, even at half capacity and half cost, with an optimized code-base that provided double the speed of the 2020 release of the platform. The project was still not headed in the right direction.
As the single person behind the project since its inception in August 2019, under the name of Crowdsourcing Declas (unreleased by name, as it was under development until PhoenixVault launched as the platform under a rebrand), I needed to replace the technology stack of the entire project. I couldn't support the old tech stack at the same time as building the new tech stack. I had to discontinue the project as it stood. It couldn't return as it was. Ever. That being said, the Phoenix rises from the ashes.

In 2020, we thought that President Trump was going to get re-elected and we wouldn't be stuck with Joe Biden for 4 years and cackling Kamala Harris. In 2020, we thought that we were uniting with Q for the finale of the Great Awakening. Maybe you thought that. I was extremely busy building the PhoenixVault software. It's been 4 years. I needed to learn Go, and not just learn Go, but really grok it. I am still learning it. It's a complex language capable of doing a lot. I work on this project part time. I spend my discretionary disposable income on the project. It costs me around $1,200/month currently to run everything as it stands, and the Raven Squad Army is supporting it with $15/month. Thank you for the support that you're continuing to show me.

I am still extremely busy on the software and the code, and my work can be seen on GitHub as it's getting released. For instance, I recently released go-passwd and go-checkfs on GitHub. These two packages are tiny, but pack a powerful punch. The go-passwd tool provides a safe way to audit a string before accepting it as a password. The go-checkfs package provides an easy way to check.File and check.Directory by providing the path to either and the file.Options{} or directory.Options{}. One line provides you the ability to check for write permissions, ownership, read-only permissions, etc. Another package I released on GitHub is verbose.
This provides me additional logging capabilities within Go, such as Tracef and TracefReturn, and all normal *log.Logger methods like Printf and Println are wrapped around Sanitize and Scrub methods that use a SecretsManager to keep track of potential secrets being logged out into your log files. The Tracef related commands provide a stack trace of the thrown error message's call before creation, and the TraceReturn related methods will log a stack trace of the error, but return the error as an error structure for re-use throughout the application; when the error is passed into the return of the func, a stack trace of the error is captured alongside the message of the error.

Last year I released the configurable package on GitHub, alongside go-textee, go-gematria, and go-sema. On their own, these packages may not seem to be connected to PhoenixVault or Project Apario, so why would you continue to sow seeds into my efforts? Well, these packages are dependencies of the apario-reader and apario-writer. In 2019, when I chose to use RoR for the PoC, it was because that's what was familiar to me and what had all of the dependencies that I needed. Go, on the other hand, is a very different technology stack from Ruby on Rails. Therefore, in order to get the RoR PoC PhoenixVault out of my control and into your hands, it needed to be re-architected from the ground up so it wouldn't cost you $6,000/month to operate. Now, a site like ElectionSelections.com can operate for $48/month! So, yeah, I could have kept with what was and become irrelevant, or I could adapt to the times and innovate a solution out of the problem I found myself stuck in.

On December 17th 2024, the RLUSD stable-coin was released by Ripple, the company behind XRP. RLUSD will drive XRP like USDT drove Bitcoin (BTC), but without excessive fees like USDC / USDT. From personal experience, the Ethereum network would look something like this.
I'd send $100 USD ⇄ USDT (Tether) ⇄ ETH and receive $66-$72 worth of ETH after paying $28-$33 in fees. With XRP, the $100 USD ⇄ RLUSD ⇄ XRP results in receiving $99.99 in XRP after paying $0.01 in fees.

On December 17th 2024, the $APARIO token was AirDropped to 434 XRP Wallets that set the $APARIO TrustLine by reserving 0.2 XRP. 86.8 XRP was reserved for the launch of the $APARIO token, and the value of the token has reached a Market Cap of $1,268 in the first week since being launched! There are currently 529 TrustLines: an additional 95 XRP Wallets have joined, adding another 19 XRP and leaving 105.8 XRP in the $APARIO Reserves.

- The APARIO/XRP AMM Pool is providing over 451 XRP of liquidity.
- The APARIO/PHNIX AMM Pool is providing over 93 XRP of liquidity.
- The APARIO/GOPHER AMM Pool is providing over 136 XRP of liquidity.
- Finally, the APARIO/YEShua AMM Pool is providing almost another 17 XRP of liquidity.

In total, there is over 697 XRP worth of $APARIO circulating in AMM Liquidity pools. At the current market rate of XRP, $2.29, this results in $1,596.13 of circulating liquidity. These liquidity pools are provided to you by me for a 0.693% transfer fee on the AMM Pool itself. When you use the AMM pool to swap your APARIO tokens into XRP tokens, the pool will charge a fee of 0.693%. This fee is the result of a calculation performed on signed transactions on the AMM Pool itself that set the pool transfer fee. The average of the vote weight + proposed fee is calculated upon changes to the ledger, and the current fee is 0.693% as a result of the above table.

The apario-reader, while having no marketing, no social media campaigns, no outreach, etc., has been growing strongly month over month, even after being offline for 17 months between the discontinued PoC RoR PhoenixVault and the truly open-source decentralized apario-reader.
In 2025, the $APARIO token will be integrated directly into the apario-reader, which will receive a re-brand and a new logo under the name of PhoenixVault. The future is going to be amazing! Do you HODL $APARIO tokens?
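The liquidity and fee figures in this post can be checked with a short sketch. The pool sizes, the $2.29 XRP price, and the 0.693% fee come from the post; the vote weights and proposed fees in the example are invented purely to show how a weighted average of votes can land on 0.693%, and the function names are hypothetical. On the XRP Ledger an AMM trading fee is expressed in units of 1/100,000, so 0.693% corresponds to 693; the ledger's own rounding rules are not modelled here.

```python
from decimal import Decimal

# XRP-side liquidity per pool, as quoted in the post
POOLS_XRP = {
    "APARIO/XRP": 451,
    "APARIO/PHNIX": 93,
    "APARIO/GOPHER": 136,
    "APARIO/YEShua": 17,
}
XRP_PRICE_USD = Decimal("2.29")
FEE_UNITS = 693  # 0.693% expressed in units of 1/100,000


def weighted_fee(votes):
    """Weighted average of (weight, proposed_fee_units) votes."""
    total_weight = sum(weight for weight, _ in votes)
    return sum(Decimal(weight) * fee for weight, fee in votes) / total_weight


def swap_fee(amount, fee_units):
    """Fee charged on a swap of `amount` at the given fee units."""
    return amount * fee_units / Decimal(100_000)


total_xrp = sum(POOLS_XRP.values())     # 697
total_usd = total_xrp * XRP_PRICE_USD   # 1596.13

# Hypothetical votes: weight 3 proposes 700, weight 1 proposes 672
example_fee = weighted_fee([(3, 700), (1, 672)])  # 693

if __name__ == "__main__":
    print("total liquidity:", total_xrp, "XRP =", total_usd, "USD")
    print("example weighted fee units:", example_fee)
    print("fee on a 100 XRP swap:", swap_fee(Decimal("100"), FEE_UNITS), "XRP")
```

Under these assumptions, swapping 100 XRP worth of value through the pool would cost 0.693 XRP in fees.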

-

I am very pleased to announce the release of Project Minnesota's instance of apario-reader. https://electionselections.com/ (can be read as Election Selections or Elections Elections or Elections Squared) Erik will be formally announcing it once we connect, but I am so darn excited. It's like it's Christmas morning. Oh wait. Merry Christmas everyone. The BEST is yet to come!

-

Hard to believe that the apario-reader application was built in 2 weeks last Christmas, and here I am on Christmas day the next year deploying a new instance of the project for Erik and Project Minnesota. Excited to get $XRP integrated into it and motivate tens of thousands of people to red pill themselves on election integrity! Thank you to everyone who has continued to show support for this project. I love and appreciate you.

-

Thank You Yeshua For Ceausescu Justice And Liberation Day!

Andrei commented on Andrei's event in Community Calendar

Thank you, Yeshua for such an incredible day with family. Thank you for watching over me. Thank you for protecting me. Thank you for helping me with Project Apario. Thank you for saving me. Let's help the world together by building what I am building here. Help me, Yeshua. Thank you! -

Project Apario appears to be a multifaceted initiative with various components and objectives, based on the search results provided:

Overview:

- Origin: Founded by Andrei Merlescu, Project Apario seems to have started as a platform or tool focused on OSINT (Open-Source Intelligence) research, particularly related to government records and transparency.
- Purpose: It was initially aimed at assisting with research into the JFK Assassination Records, providing a platform for grassroots supporters to access and analyze declassified government documents. Over time, it evolved or was intended to evolve into a tool for broader political transparency, news, and commentary.

Key Elements:

- Technology:
- PhoenixVault: Described as a proof of concept software focused on handling declassified records, particularly those related to the JFK assassination.
- Apario Reader and Writer: Components of what seems to be a decentralized approach to managing government public domain records, aiming to democratize access to information.
- Raven Squad Army: An initiative or community associated with Project Apario, possibly for rallying support or participation in this decentralized information warfare landscape.
- Business and Legal Structure:
- Project Apario LLC: A legal entity registered in New Hampshire, operating from Washington, DC, which suggests a business structure to support the project's activities or development.
- Public Engagement: There were efforts to fund the project through platforms like Ko-fi, indicating crowdfunding or supporter-driven development. The project had a presence on various platforms including Telegram, YouTube, and SoundCloud, indicating an attempt to engage with a wide audience.

Status and Evolution:

- Discontinuation: By March 31, 2023, Project Apario was announced to be concluding, with statements from its founder that it would not return. This suggests either a pivot in focus or the end of this particular initiative.
- There's also a mention of an "official offline" status with no return planned, metaphorically likened to a ship sinking, indicating a dramatic end or shift.

Cultural and Ideological Aspects:

- The project seems to have ties to libertarian and transparency ideologies, aiming to challenge mainstream media narratives by providing alternative or direct access to information.

Public Perception and Impact:

- Community: There were followers and an audience, as seen from the engagement on platforms like Ko-fi, where people could support the project financially.
- Criticism or Challenges: The project encountered issues like government-ordered censorship, suggesting it faced resistance or legal challenges in its pursuit of transparency.
- Legacy: While the project itself might have ended, its concepts and the technology developed (like PhoenixVault) could be seen as contributing to discussions on government transparency and information access.

Conclusion:

Project Apario was an ambitious project with a mission to increase transparency and access to governmental records, particularly through technology that could empower individuals to bypass traditional information gatekeepers. Despite its apparent cessation, the ideas and tech developed might continue to influence similar initiatives or be picked up by others in the field of information freedom and transparency.

-

Per the request of @Alien, display names can now be changed on the forum boards. The default of the software is to disallow changes until a policy has been established. Below are the instructions on how you can take advantage of this service if you need it. First, up at the top you'll want to click on your username. I signed into your account, @Alien, using the Admin CP feature of Invision Community. From this drop down menu, if you click on Profile, it'll bring you to this page... If you click on Change you'll be brought to the edit form: As you can see, 7 display name changes are permitted once you've been registered for 14 days. Your limit resets after 144 days. Thank you!

-
